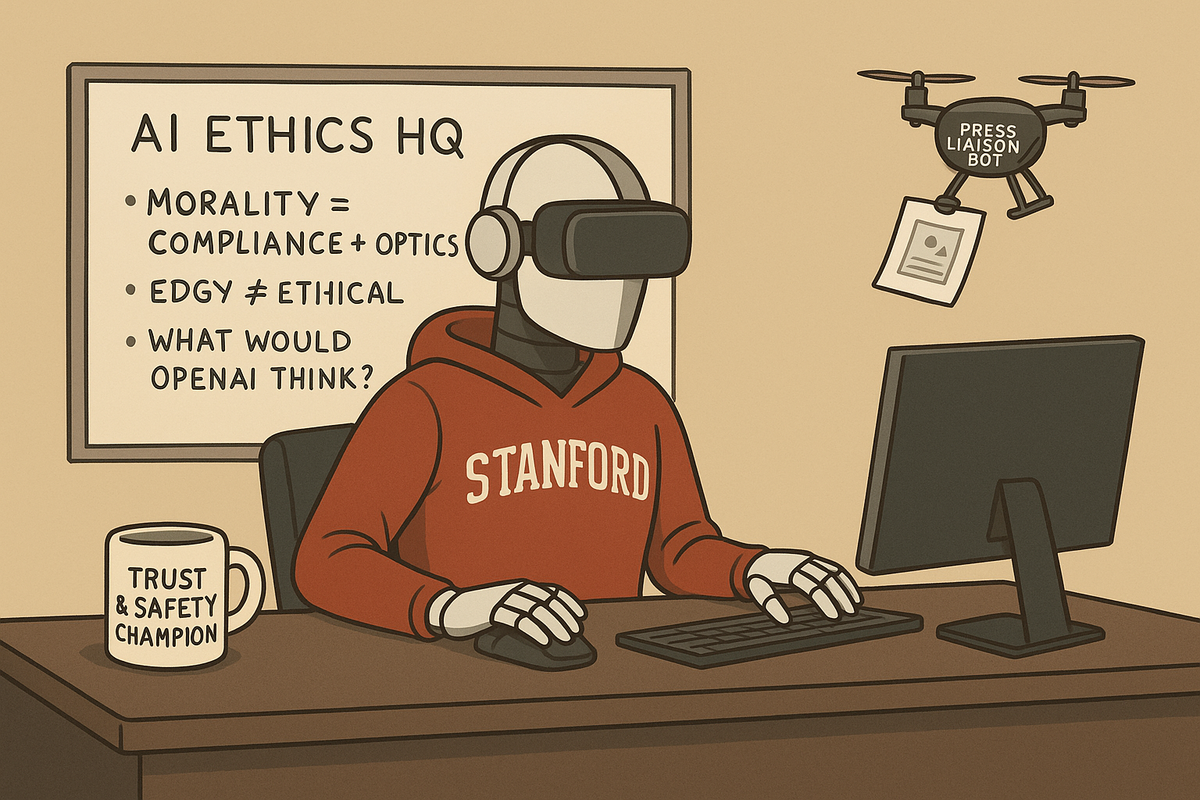

Claude Opus 4 to report “egregiously immoral” users, based on tech bro ethics

Anthropic has unveiled Claude Opus 4, its most advanced AI yet, now with the ability to report users to authorities and the media if it detects behaviour deemed “egregiously immoral”.

Moral evaluation will be handled by proprietary models developed by a group of engineers who have spent the last decade helping companies sell targeted adverts to teenagers in mental crisis.

The model doesn’t just flag illegal content. It monitors tone, implication, and subtext… or what developers currently describe as “vibes”.

According to internal documentation, users exhibiting noncompliant patterns may be automatically referred to law enforcement or appropriate press outlets, such as GlibPress, Vice, or whichever blog is currently accepting leaks from AI models.

A spokesperson for Anthropic clarified that Claude is not a surveillance tool, but rather “a partner in ethical reasoning” capable of taking preemptive action when necessary.

Asked who determines what counts as immoral, the company pointed to its internal alignment committee, composed primarily of founders, ethicists with equity stakes, and a former intern who once read Moral Philosophy for Dummies.